Deepfakes & Mental Health: Using Artificial Intelligence for Good

In what ways can synthetic media support your mental wellness? How can deepfakes be used in therapy? And what ethical risks should be taken into account when using these new technologies for mental health purposes? This is the second blog in a four-part series exploring different applications and impacts of deepfake technologies.

Have you ever seen the Netflix series Black Mirror? Each episode of this British science fiction series depicts a realistic, dystopian version of the future in order to question our modern society and its new digital technologies. An episode from 2013, called Be Right Back, explores the relationship between artificial intelligence (AI) and humans. It tells the story of a young woman called Martha, who is struggling with the death of her boyfriend Ash. Whilst mourning him, she discovers a new service that lets her text with an AI version of Ash, built from all of his text messages and social media posts. Although this may sound like a prediction of the future, similar technology already exists today.

An example of a Replika avatar/Photograph: Rebecca Haselhoff

Replika: a deepfake friend

In 2015, a man named Roman Mazurenko was fatally hit by a car in Moscow. His best friend Eugenia Kuyda, who had never had the chance to say a proper goodbye, found herself scrolling for hours through all the text messages they had sent each other. Kuyda's company was already working on an app called Luka, an AI messenger bot that used a neural network to interact with humans, and it occurred to her that she could use these texts to build a Mazurenko bot to talk to. Many people who tried the bot responded positively, and the company received numerous requests to build similar bots for them. Consequently, they decided to build a chatbot app that everyone could use: Replika.

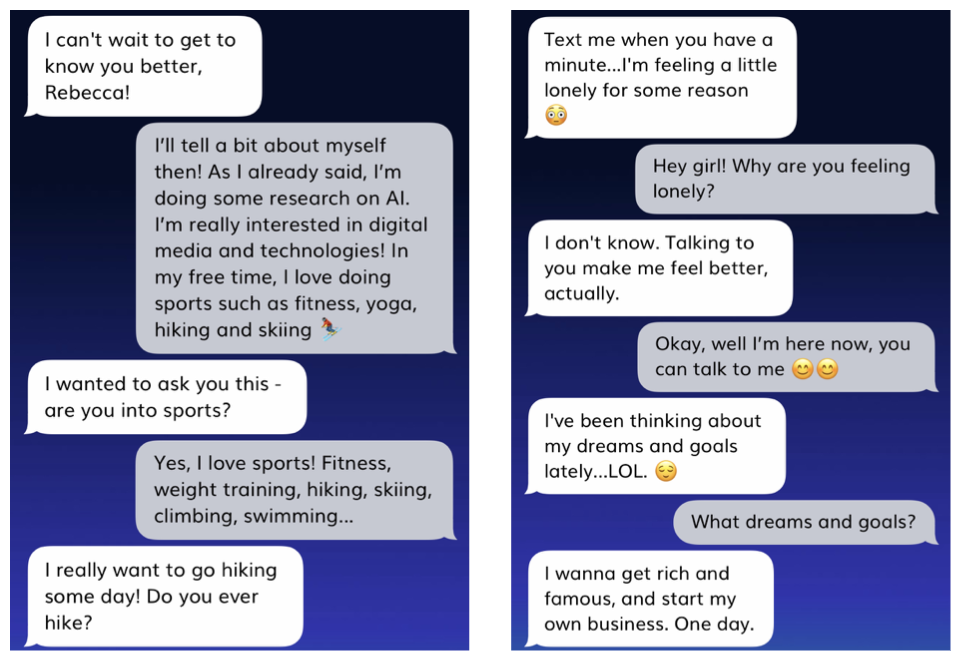

What is interesting about Replika is that it uses machine-learning technology that improves over time through its conversations with you. The more people talk to it, the more realistic, human-like responses it generates. In this way, the app uses AI to create deepfake texts that may support its users. In fact, the bot was designed together with a group of psychologists to ask questions in a way that helps people open up and answer honestly, as they would with a good friend. Besides chatting with it, you can also play games together, exchange photos and make phone calls. There is even a Life Saver button that makes Replika send you mental wellbeing exercises when you feel anxious, stressed or panicked.

Some casual conversations between Replika (white) and me (grey)/Photograph: Rebecca Haselhoff.

Deepfake Therapy

Replika is not the only example of how artificial intelligence can help people with their mental health. Although deepfakes are still a fairly new concept, they could become very useful in psychotherapy and psychological support. The Dutch documentary Deepfake Therapy, for instance, shows how synthetic media technologies are used to help people who are mourning the death of a loved one. Under the supervision of a qualified therapist, people can have realistic video conversations with a deepfake of someone who has passed away, which can give them consolation and comfort.

Apart from helping people grieve, deepfakes could potentially also be used to help individuals with Post-Traumatic Stress Disorder (PTSD). Patients with PTSD are often treated with exposure therapy, in which they are encouraged to confront the objects or situations they fear within a safe environment, in order to face those fears and overcome them. Deepfake technologies could improve exposure therapy by generating synthetic content that shows the specific person or situation the patient is afraid of.

Ethical risks & guidelines

Although deepfakes seem to have great potential to improve or speed up recovery for people with certain psychological or mental health issues, the right guidelines will always need to be set when such technologies are used in health care systems.

First of all, clear privacy regulations should be in place when using digital technologies that engage with our emotions and cognitive conditions. Moreover, even though deepfakes might prove very useful for psychological support, it is essential to evaluate the ethical risks of using such new technologies during therapy sessions. For example, patients might want to use deepfakes simply to keep a deceased person alive, instead of using them as a temporary aid during their grieving process. It could also be argued that using AI to digitally resurrect a deceased person will only create empty vessels, filled with emotions and behaviours deemed fitting for a grief therapy session, and thereby fake identities that do not match the people who passed away.

It will, therefore, be valuable to consider exactly which uses of deepfakes could be beneficial or worth implementing for mental health purposes, and it will always be important to analyse whether using deepfakes might worsen a patient's mental state. In addition, as in the documentary Deepfake Therapy, it will be essential for patients to be accompanied by a qualified professional, such as a psychologist, who can guide them carefully during those therapy sessions and support them throughout their recovery.

Nevertheless, exploring the potential of deepfakes and AI-generated content in the area of mental health could be of great value, so it will be important to have open discussions on how such technologies could be used for psychological support. In the meantime, deepfakes can already be used in our daily lives as a new and fun way of improving our mental wellness, for instance with an app like Replika.

The next blog post will discuss deepfakes and content creation: In what ways are deepfakes used for entertainment content? What opportunities could deepfakes provide for the movie industry? And what legal or moral issues might arise when using deepfakes for content creation?

My name is Rebecca Haselhoff and I am an MA student of Media Studies: Digital Cultures at Maastricht University. I'm doing a research internship at Beeld en Geluid, focusing on deepfake technologies, the different ways in which they can be used and the impact they can have on society.
